Improvements to OutcomeMaps This Year

My hobby project is a self-hosted grading/feedback platform for students to track progress on learning standards. I call it OutcomeMaps, mainly because standardmaps.com was already taken, and it is probably the second most-used site on my machine each day.

Everything we do in chemistry is linked to a specific learning outcome - usually a skill based on theory (describe the structure of the atom) or on computation (calculate the mass of product expected from these inputs). Every unit has two or three outcomes defined, each unit building on the previous, and students are graded on their proficiency on these standards. The problem is that I didn't have a great way to track progress on their learning.

So, I set out to build a little frontend for a database. It's simple in the sense that students are essentially read-only users and they can see a catalog of all feedback they've received through the year and a dashboard of their progress on any given standard. As the teacher, I can create/edit/score anything, but the main interaction layer is feedback on the skills.

The key is that it helps me make decisions and helps students see their opportunities for improvement. I don't think there's one way to quantify "proficient," but this system has helped me collect data which can then be used to plan instruction and address emerging needs.

One thing I changed this year is to give binary scores to students. I started thinking about this switch back in March, mainly because my grading scheme at the time had areas of uncertainty in the scale that I wanted to eliminate. On paper, this turned into a 0-1-2 scale: no submission, improving, demonstrated. Students have been trained to glance at the number but, more importantly, pay attention to the written feedback. Based loosely on the single-point rubric, it does a better job of getting students to think about their thinking and not just about task completion.

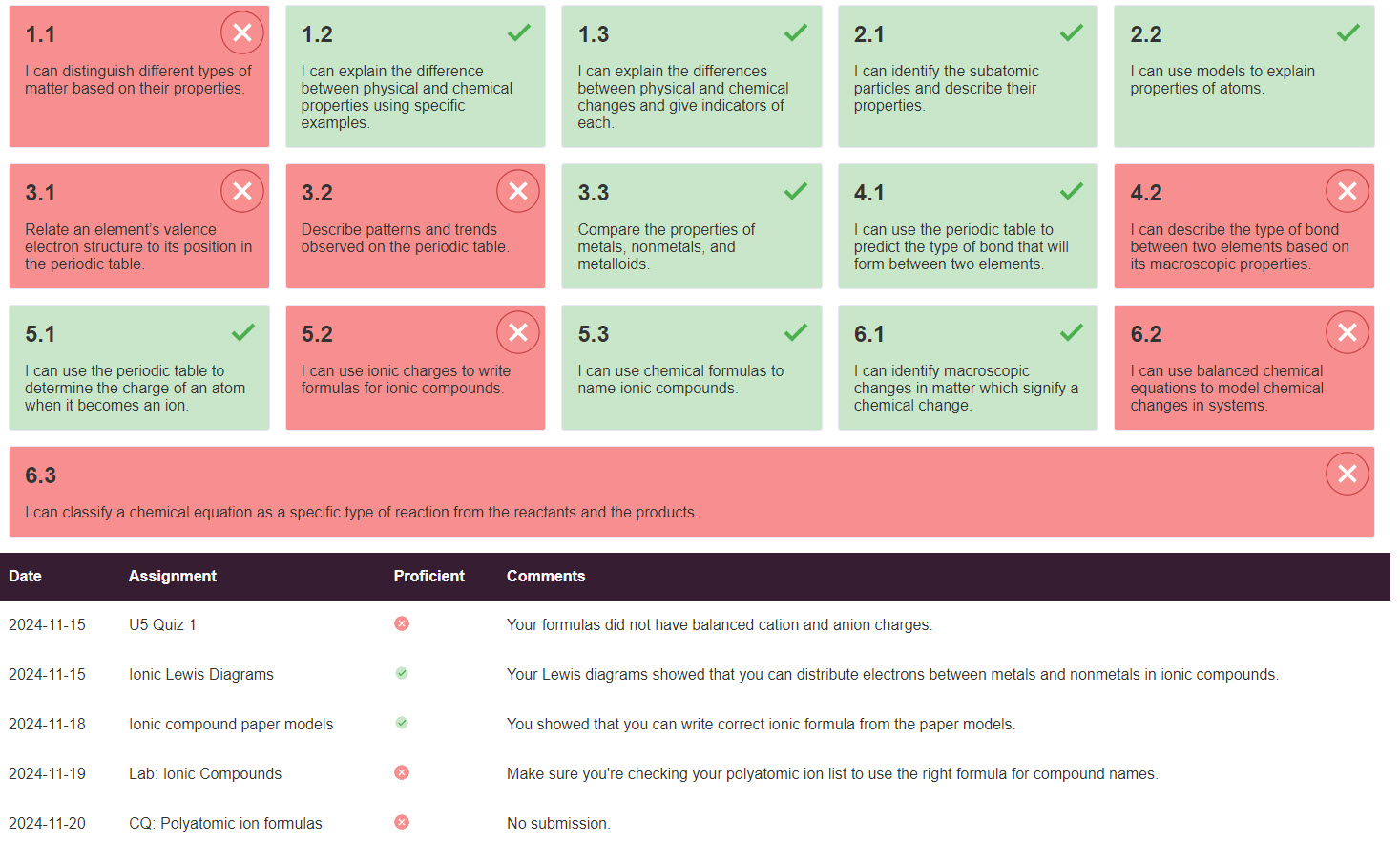

On the web, I turn that into a "check or x" - the UI doesn't actually show their score, just a checkmark or an "x" to note proficiency on that thing. The feedback is displayed right alongside each assignment's result so they can identify their own strengths and areas for improvement on each standard. The goal is to reach a check by the time the unit test rolls around.

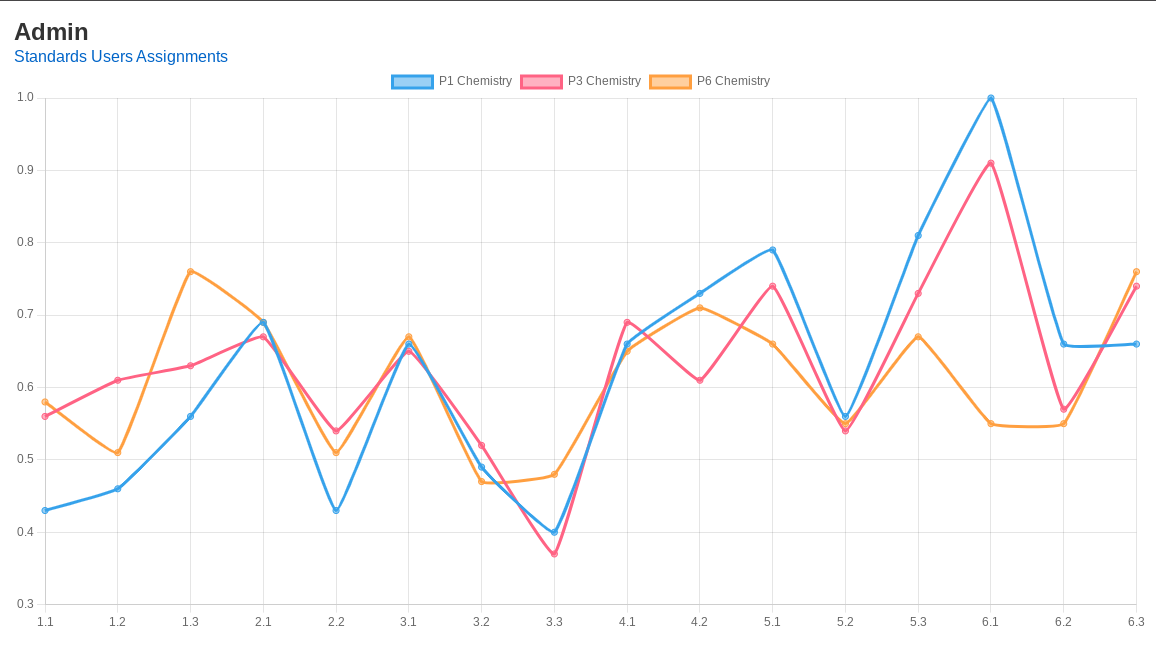
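As a rough sketch of that score-to-mark step (not the actual OutcomeMaps code), the 0-1-2 scale can be collapsed into a check or an x in a few lines of Python. The names `Score` and `proficiency_mark` are made up for illustration, and the rule that only a 2 ("demonstrated") earns the check is an assumption based on the scale described above.

```python
from enum import IntEnum


class Score(IntEnum):
    """The 0-1-2 paper scale. Member names are hypothetical labels."""
    NO_SUBMISSION = 0
    IMPROVING = 1
    DEMONSTRATED = 2


def proficiency_mark(score: int) -> str:
    """Return the mark a student would see instead of the raw number.

    Assumption: only DEMONSTRATED counts as a check; everything else is an x.
    Score(score) raises ValueError for anything outside 0-2.
    """
    return "✓" if Score(score) is Score.DEMONSTRATED else "✗"
```

Keeping the number out of the return value mirrors the idea that students read the mark and the written feedback, not the score itself.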

This year, I built out an admin panel with some simple charts that will help me - at a glance - look for patterns in each course.

Up to this point, I had been calculating proficiency on the fly with each request because it was just one roster at a time (~25 students per class). This chart needed to generate three objects with data for each standard, so I was looping over each student multiple times. The chart took several seconds to load, which was less than ideal.

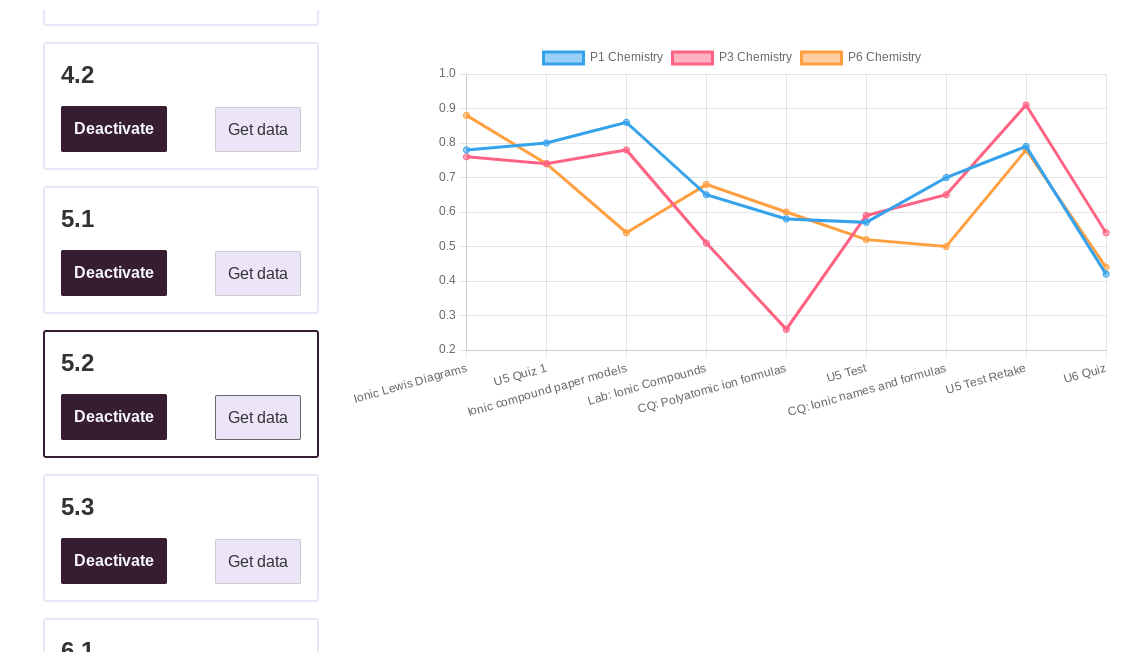

I did some work on the database to create a new association table that would record when a student was proficient - generally after taking a test - and I could store that record permanently. I also wrote in a method to override proficiency manually - the use case being that a student might come after school and do some one-on-one work that wasn't on a specific assignment. I can store that proficiency with a click instead of creating a new assignment for that one thing.
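To make the idea concrete, here is a minimal sketch of what such an association table could look like, using Python's built-in `sqlite3` module. Every table, column, and function name here (`student_proficiency`, `is_override`, `record_proficiency`, and so on) is an assumption for illustration; the real schema may look quite different.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create hypothetical tables: students, standards, and the association."""
    conn.executescript("""
        CREATE TABLE student  (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE standard (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        -- One row per (student, standard) once proficiency is recorded.
        CREATE TABLE student_proficiency (
            student_id  INTEGER NOT NULL REFERENCES student(id),
            standard_id INTEGER NOT NULL REFERENCES standard(id),
            is_override INTEGER NOT NULL DEFAULT 0,  -- 1 = set manually
            recorded_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP,
            PRIMARY KEY (student_id, standard_id)
        );
    """)


def record_proficiency(conn, student_id, standard_id, override=False):
    """Store proficiency once; a repeat call for the same pair is ignored."""
    conn.execute(
        "INSERT OR IGNORE INTO student_proficiency "
        "(student_id, standard_id, is_override) VALUES (?, ?, ?)",
        (student_id, standard_id, int(override)),
    )
    conn.commit()


def is_proficient(conn, student_id, standard_id) -> bool:
    """A single lookup replaces recalculating from every assignment."""
    row = conn.execute(
        "SELECT 1 FROM student_proficiency "
        "WHERE student_id = ? AND standard_id = ?",
        (student_id, standard_id),
    ).fetchone()
    return row is not None
```

In this sketch the manual override is just the same insert with `override=True`, so the one-click after-school case needs no placeholder assignment.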

This let me query all of those records at once and cut down the load time by orders of magnitude. It also allowed me to set up per-standard comparison charts in the admin panel.

Now, as we're in the unit, I can look at the progress for each class on one screen. This used to be manual - I would look class by class and think over next steps. Now, I can reflect more on what I do differently from period to period and how that translates into success on the skills.
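A period-by-period view like this can come from one joined, grouped query over stored proficiency records instead of a loop over every student. The sketch below uses Python's built-in `sqlite3` module; the schema (a `period` column on `student`, a `student_proficiency` table) and the function names are hypothetical stand-ins, not the actual OutcomeMaps models.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical minimal schema: students by period, standards, records."""
    conn.executescript("""
        CREATE TABLE student (
            id INTEGER PRIMARY KEY, name TEXT NOT NULL, period INTEGER NOT NULL
        );
        CREATE TABLE standard (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE student_proficiency (
            student_id  INTEGER NOT NULL REFERENCES student(id),
            standard_id INTEGER NOT NULL REFERENCES standard(id),
            PRIMARY KEY (student_id, standard_id)
        );
    """)


# Pair every student with every standard, then LEFT JOIN the stored records.
# COUNT(sp.student_id) skips NULLs, so it counts only proficient students.
QUERY = """
    SELECT s.period, st.name,
           COUNT(sp.student_id) AS proficient,
           COUNT(*)             AS enrolled
    FROM student s
    CROSS JOIN standard st
    LEFT JOIN student_proficiency sp
           ON sp.student_id = s.id AND sp.standard_id = st.id
    GROUP BY s.period, st.id, st.name
    ORDER BY s.period, st.id
"""


def proficiency_by_period(conn: sqlite3.Connection) -> list[dict]:
    """Return one chart-ready row per (period, standard) from a single query."""
    return [
        {"period": p, "standard": name, "proficient": n, "enrolled": total}
        for p, name, n, total in conn.execute(QUERY)
    ]
```

Each dictionary carries both the proficient count and the enrollment, so a chart can show either raw counts or a percentage per class.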

This year pushed me in terms of my coding ability. I've never been comfortable with database joins, but I'm using them all over the place now. I also have a better handle on how to set up models to get the data I want. I'm also coming up with ideas about how to help shepherd students through their own reflections on learning. One goal for this next year is to introduce tagging on standards ("theory" vs "computation", for example) which can help target even more interventions and study strategies for students.

Beyond that, I'm more strategic about my CSS and template structures. I've done a lot of maintenance work to clean up templates and I'm getting ready to tackle a full CSS cleanup (it's a hot steamy mess right now). It's by far my most long-lived project in terms of raw code written to do a thing (as opposed to raw words here) and I'm really proud of how far it has come from my really bad first couple of versions.
